HashMap is used widely in programming to store values in pairs(key, value) and also for its near-constant complexity for its get and put methods. To access the value we need a key. But have you ever wondered, how it functions internally? How the keys and values are stored? What are the things that decide on its performance?

In this article, we are going to discuss various things about the working of HashMap and the things involved in it.

If someone asks “How HashMap works?”, the straight forward answer will be “On the principle of Hashing”.

What is Hashing?

Hashing is simply assigning a unique value to an object based on some formula/algorithm.

“Hash function should return the same hash code each and every time when the function is applied on the same or equal objects. In other words, two equal objects must produce the same hash code consistently.”

The hashcode is generated by the hashcode() method defined in Object class. This function produces hash code by typically converting the internal address of the object into an integer. Thus it produces different hash codes for different objects.

If we need equal hash codes for equal objects, refer here. This is achieved by overriding equals() method of Object class.

Now, we know about Hashing. But still, we don’t know how the key and values are stored in HashMap.

The internal structure

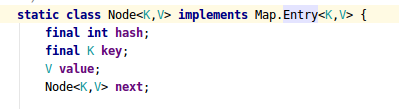

The structure of a Single Entry will be like this.

HashMap maintains an array of such nodes.

Now we know how the hashMap looks internally. It is basically an array of nodes where each node is a LinkedList which contains a key, value, hash value, and the link to the next node in case of collision.

We will get to the collision soon. Now we will see how the put and get methods work?

How put(K key, V value) works?

First of all, the key object is

checked for null. If the

key is

null, the

value is stored in

table[0] position. Because hashcode

for null is always 0.

Then on the next step, a hash value is calculated using the key’s hash code

by calling its hashCode() method. This

hash value is used to calculate the index in the array for storing

Node object.

Now comes the main part

We know that two unequal objects can have the same hash code value, how two different objects will be stored in the same array location called bucket.

You already know the answer. The answer is LinkedList.

If an object is already stored at an index, its next is called. If it is null, the current object becomes the next node of the LinkedList. If not, the same is repeated until we encounter the null. I mean we traverse till the end.

Then, how the update works?

I know you start to wonder, what if I want to update the value of an existing key?

Here, the hash will be the same for the same keys and it will give the index of where the object is stored. While iterating the LinkedList of that specific hash, we call equals() method on key for equality check, and thus, it will update the value of the same key.

The get(K key) methods work the same way like put. First, it will find the index the key is located and calls equals for fetching the value of the Key while iterating the linkedList.

Performance improvement from Java 8

As part of the work for

JEP 180, there is a performance improvement for HashMap objects where there are

lots of collisions in the keys by using balanced trees rather than linked

lists to store map entries. The principal idea is that once the number of

items in a hash bucket grows beyond a certain threshold(Current

TREEIFY_THRESHOLD = 8), that bucket will switch from using a linked list of

entries to a balanced tree. In the case of high hash collisions, this will

improve worst-case performance from

O(n) to

O(log n).

This is surely a good improvement, but what happens when again if we remove elements and the value decreases below the current treeify threshold? A. It will be converted back to LinkedList(currently: UNTREEIFY_THRESHOLD = 6))

Note: In usages with well-distributed user hashCodes, tree bins are rarely used.

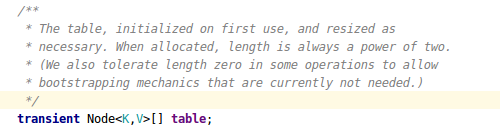

Example :

Let’s assume the hashmap size if 5. The hashcode function is ASCII value offirst character of key. Index function be hashcode is (first character of key)&& (n-1).

You can see, the first character is ‘f’ in both entries. According to the hashcode function and index function, they go into the same bin.

Performance

Two factors that affect the performance of hashmap are: 1. initial capacity 2. load factor.

The capacity is the number of buckets in the hash table, and the initial capacity is simply the capacity at the time the hash table is created. The load factor is a measure of how full the hash table is allowed to get before its capacity is automatically increased. When the number of entries in the hash table exceeds the product of the load factor and the current capacity, the hash table is rehashed (that is, internal data structures are rebuilt) so that the hash table has approximately twice the number of buckets.

As a general rule, the default load factor (.75) offers a good tradeoff between time and space costs. Higher values decrease the space overhead but increase the lookup cost (reflected in most of the operations of the HashMap class, including get and put). The expected number of entries in the map and its load factor should be taken into account when setting its initial capacity, so as to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, no rehash operations will ever occur.

The default initial capacity of the

HashMap takes is 16 and the load

factor is 0.75f (i.e 75% of current map size). This represents that after

storing the 12th key-value pair into the

HashMap , its capacity becomes

32.

Post a Comment